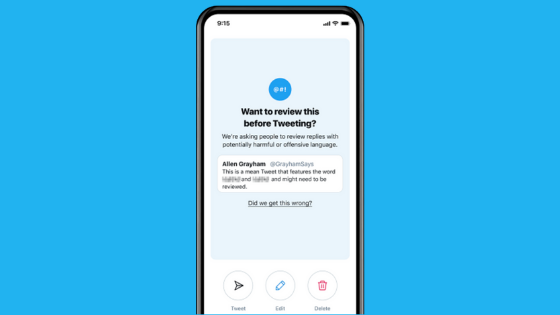

7 May 2021

Twitter Launches Updated Offensive Comment Alerts

After recently re-launching its test of warning prompts on tweet replies that contain potentially offensive remarks, Twitter is now rolling out an updated version of the prompts on both iOS and Android. The new prompts utilise an improved detection algorithm to avoid misidentification, and also aim to provide more context and options to help users better understand what the warning alerts mean.

The new version of the prompt aims to be more helpful and polite, while also getting better at determining the meaning behind tweets.

As explained by Twitter:

“In early tests, people were sometimes prompted unnecessarily because the algorithms powering the prompts struggled to capture the nuance in many conversations and often didn’t differentiate between potentially offensive language, sarcasm, and friendly banter. Throughout the experiment process, we analysed results, collected feedback from the public, and worked to address our errors, including detection inconsistencies.”

That’s led to a significant increase in performance throughout the initial test pool, which has now given Twitter the confidence to expand the option.

The updated offensive reply algorithm will now factor in things like the nature of the relationship between the author and replier (e.g. how often they interact), and will include better detection of strong language, including profanity. The prompts themselves will also provide more options to let users glean more context and provide feedback to Twitter about the alert.
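
Twitter hasn't published how its detection works, so the following is only a toy sketch of the general idea described above: a strong-language signal weighed against how often two accounts interact. The word list, thresholds and names (`Reply`, `strong_language_score`, `should_prompt`) are all hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass

# Hypothetical word list and thresholds, for illustration only.
# None of these values come from Twitter.
STRONG_WORDS = {"idiot", "stupid", "trash"}
BASE_THRESHOLD = 0.3
MAX_FAMILIARITY_BONUS = 0.4
FAMILIARITY_CAP = 20  # interactions beyond this add no further leniency


@dataclass
class Reply:
    text: str
    prior_interactions: int  # how often author and replier have interacted


def strong_language_score(text: str) -> float:
    """Fraction of words in the reply that appear in the strong-word list."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    if not words:
        return 0.0
    return sum(w in STRONG_WORDS for w in words) / len(words)


def should_prompt(reply: Reply) -> bool:
    """Prompt only when strong language exceeds a familiarity-adjusted threshold."""
    # Accounts that interact often get a higher threshold, on the
    # assumption that their strong language is more likely banter.
    familiarity = min(reply.prior_interactions, FAMILIARITY_CAP) / FAMILIARITY_CAP
    threshold = BASE_THRESHOLD + MAX_FAMILIARITY_BONUS * familiarity
    return strong_language_score(reply.text) > threshold
```

In this sketch, the same reply can trigger a prompt between strangers but not between accounts that interact regularly, which is the kind of distinction between offensive language and friendly banter that Twitter says its early tests struggled with.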

Twitter says that, specifically, 34% of users in the initial tests for the new prompts ended up revising their initial reply, or decided to not send their reply at all, while users also, on average, posted 11% fewer offensive replies in future after being served the alert.

This homes in on a key element within the broader online engagement space. Research conducted by Facebook has shown that basic misinterpretation plays a key role in exacerbating angst, with users often misunderstanding responses in exchanges, sparking unintended conflict.

Adding an element of friction here, by simply asking users to re-assess their reply, may well be enough, in many cases, to avoid further negative impacts, improving on-platform discourse overall.

That's an area where Twitter has been doing much better of late. Long known for its toxicity, Twitter has made conversational health a much bigger focus, which has made the platform, more generally, a more open and welcoming space.

There are, of course, still significant concerns on this front and it’s a battle that will likely never be ‘won’, as such, but small prompts and tweaks like this are playing a role in improving the Twitter experience for many.
